The Problem: You inherit a dataset with 50 columns of metrics. Your dashboard is slow, your storage costs are high, and your stakeholders are confused. Which metrics actually matter?

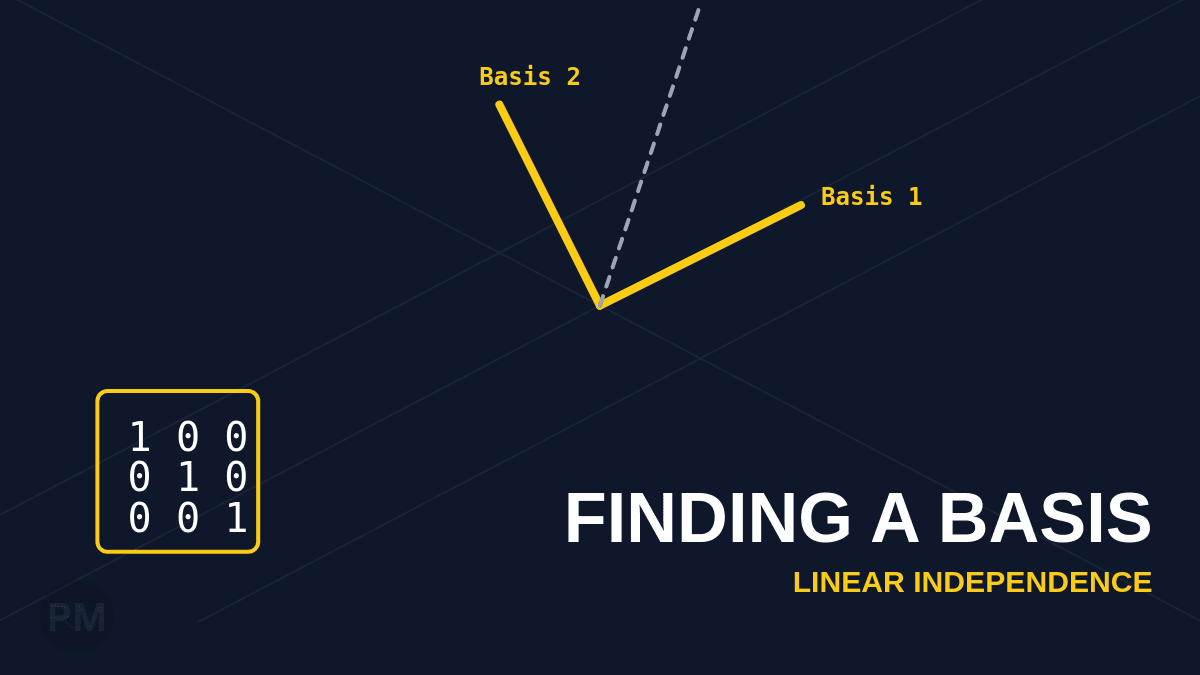

In data science, we often talk about "Feature Selection." In linear algebra, spotting redundant features is a Rank problem.

During my recent analysis of Coder Productivity data, I encountered a common issue: Redundancy. We had metrics like "Total Lines of Code," "New Lines," "Refactored Lines," and "Deleted Lines."

Are these 4 separate pieces of information? Or is "Total" just a linear combination of the others?

The Matrix Rank Test

Let's put our metrics into a matrix M, where rows are developers and columns are metrics.

```matlab
% Columns: [New Lines, Refactored, Deleted, Total]
M = [
    500, 100,  50, 650;
    300,  50,  20, 370;
    400, 200, 100, 700
];
```

If one column depends on the others, storing and processing all 4 wastes resources.

We check the Rank:

```matlab
rank(M)
```

Result: 3.

Interpretation

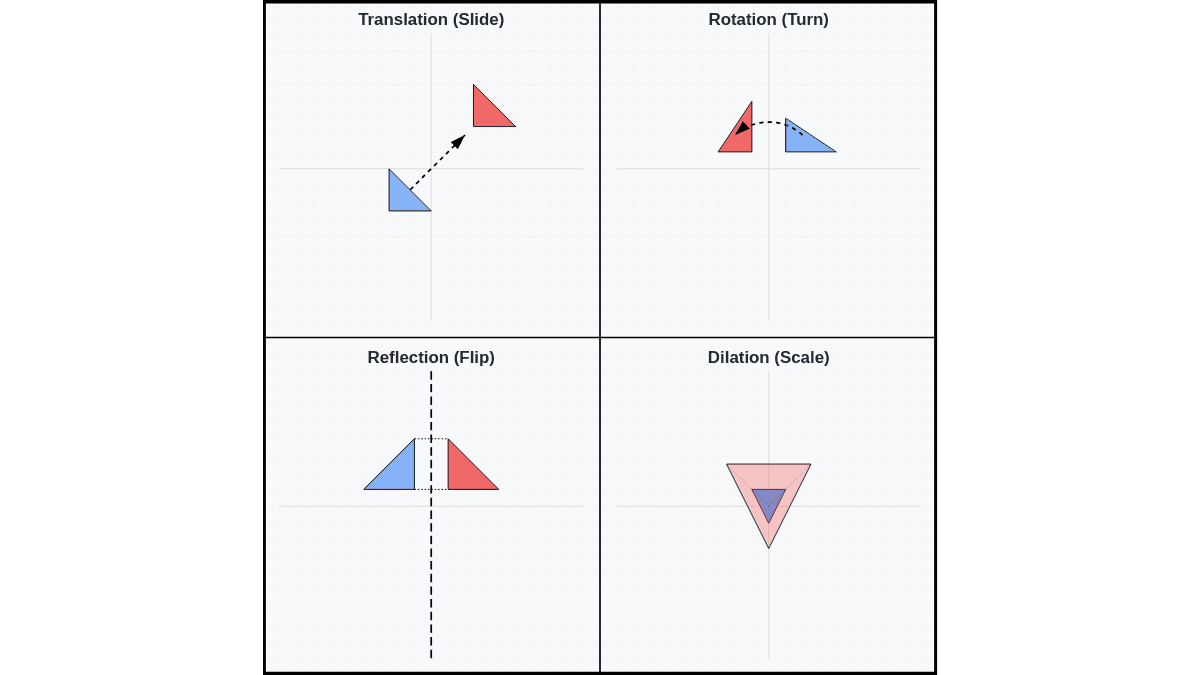

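We have 4 columns, but the Rank is 3.

This proves that the columns are linearly dependent: at least one of them is a linear combination of the others and provides no new information. One caveat for this toy example: M has only 3 rows (developers), so its rank could never exceed 3, whatever the numbers were. The test is only informative when there are at least as many rows as columns, which any real dataset with more developers than metrics satisfies.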
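The sketch below makes the test do real work by appending two hypothetical developers (made-up numbers, with Total still computed as the sum), so the matrix has more rows than columns, and compares it with a variant whose fourth column is an unrelated, equally hypothetical metric. It also prints the singular values, since MATLAB's `rank` counts the singular values larger than a small default tolerance.

```matlab
% Three original developers plus two HYPOTHETICAL ones (made-up numbers).
% Columns: [New Lines, Refactored, Deleted]
parts = [500, 100,  50;
         300,  50,  20;
         400, 200, 100;
         250,  80,  40;    % hypothetical developer
         600, 150,  90];   % hypothetical developer

% Fourth column derived exactly: Total = New + Refactored + Deleted.
M5 = [parts, sum(parts, 2)];

% Fourth column replaced by a HYPOTHETICAL unrelated metric (made-up values).
other = [12; 30; 7; 18; 25];
N5 = [parts, other];

fprintf('rank(M5) = %d of %d columns\n', rank(M5), size(M5, 2));
fprintf('rank(N5) = %d of %d columns\n', rank(N5), size(N5, 2));

% The derived column shows up as a fourth singular value that is
% negligible next to the largest one.
disp(svd(M5)');
```

With five rows the rank could have been 4, so getting 3 for M5 but 4 for N5 is actual evidence that Total is derived rather than an artifact of the matrix shape.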

In this simple example, it's obvious: Total = New + Refactored + Deleted. But in complex datasets with "Weighted Productivity Scores" or "Efficiency Indices," these dependencies are often hidden.
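Hidden dependencies can be caught the same way. In this sketch a "Weighted Productivity Score" is a hypothetical composite with invented weights (1, 0.5 and -0.25), appended to five developers (the three from M plus two made-up ones). The rank exposes the dependency, and MATLAB's backslash operator, which performs a least-squares solve for a tall matrix, recovers the hidden weights.

```matlab
% Columns: [New Lines, Refactored, Deleted]; rows 4-5 are HYPOTHETICAL developers.
parts = [500, 100,  50;
         300,  50,  20;
         400, 200, 100;
         250,  80,  40;
         600, 150,  90];

% HYPOTHETICAL composite metric with invented weights.
w = [1.0; 0.5; -0.25];
score = parts * w;                 % "Weighted Productivity Score"

X = [parts, score];
fprintf('%d columns, rank %d\n', size(X, 2), rank(X));

% Least-squares fit of the score on the independent columns recovers the weights.
w_hat = parts \ score;
fprintf('recovered weights: %s\n', mat2str(w_hat', 4));
```

On real data, a near-zero residual (`norm(parts*w_hat - score)`) is what distinguishes a fully derived column from one that is merely correlated with the others.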

Cleaning the Dataset

Once rank(M) reveals redundancy, how do we find the culprit? We look at the Null Space.

```matlab
null(M, 'r')   % rational basis for the null space of M
```

The output gives us the coefficients of the dependency. For this M it is [-1; -1; -1; 1], which tells us:

-1*New - 1*Refactored - 1*Deleted + 1*Total = 0

Or:

Total = New + Refactored + Deleted
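The rational basis from `null(M, 'r')` can also be read straight off the reduced row echelon form, and `rref` additionally reports which columns are pivots (independent) and which are not (redundant), which helps when more than one column is derived. A sketch, again adding two hypothetical developers so the matrix has more rows than columns; the `names` labels and the printing loop are illustrative helpers, not library features.

```matlab
% Three original developers plus two HYPOTHETICAL ones (made-up numbers).
% Columns: [New Lines, Refactored, Deleted, Total]
M5 = [500, 100,  50, 650;
      300,  50,  20, 370;
      400, 200, 100, 700;
      250,  80,  40, 370;
      600, 150,  90, 840];

names = {'New', 'Refactored', 'Deleted', 'Total'};

[R, pivots] = rref(M5);                      % pivots = indices of independent columns
redundant = setdiff(1:size(M5, 2), pivots);  % non-pivot columns depend on the pivots

for j = redundant
    coeffs = R(1:numel(pivots), j);          % column j = sum of coeffs(k) * pivot column k
    terms = arrayfun(@(k) sprintf('%g*%s', coeffs(k), names{pivots(k)}), ...
                     1:numel(pivots), 'UniformOutput', false);
    fprintf('%s = %s\n', names{j}, strjoin(terms, ' + '));
end
```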

As long as this relation holds for every row we store, we can safely delete the Total column from our raw data storage, cutting the space these four metrics occupy by 25% (one column in four), knowing we can recalculate it exactly whenever it is needed.
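A minimal sketch of that clean-up on the three-developer M: check the relation on every row before dropping anything, keep only the independent columns, and rebuild Total on demand. Because the metrics are integer counts, the reconstruction is exact.

```matlab
% Columns: [New Lines, Refactored, Deleted, Total]
M = [500, 100,  50, 650;
     300,  50,  20, 370;
     400, 200, 100, 700];

% 1. Verify the dependency on every row before dropping anything.
assert(isequal(M(:, 4), sum(M(:, 1:3), 2)), 'Total is not New + Refactored + Deleted');

% 2. Store only the independent columns.
stored = M(:, 1:3);

% 3. Recompute Total on demand.
rebuilt = [stored, sum(stored, 2)];
fprintf('stored %d of %d columns; exact rebuild: %d\n', ...
        size(stored, 2), size(M, 2), isequal(rebuilt, M));
```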

Why This Matters for Business

In my interview assessment, identifying these redundancies allowed me to:

- Reduce Data Noise: I presented 3 key independent drivers of productivity instead of 10 correlated charts.

- Simplify the Model: Paradoxically, removing data made the predictive model more accurate because it removed collinearity.
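The collinearity point can be made concrete. When one column is an exact combination of the others, any coefficient vector can be shifted along the null vector [-1; -1; -1; 1] without changing a single prediction, so least squares has no unique solution and the individual coefficients cannot be interpreted. A sketch with invented coefficients and two hypothetical extra developers; no real target variable is involved.

```matlab
% Columns: [New Lines, Refactored, Deleted]; rows 4-5 are HYPOTHETICAL developers.
parts = [500, 100,  50;
         300,  50,  20;
         400, 200, 100;
         250,  80,  40;
         600, 150,  90];
X_full = [parts, sum(parts, 2)];   % design matrix that still includes Total
z = [-1; -1; -1; 1];               % null vector: -New - Refactored - Deleted + Total = 0

% Two HYPOTHETICAL coefficient vectors that differ by a multiple of z.
% (Values are chosen to be exact in binary floating point.)
beta_a = [0.25; 0.5; -0.75; 0];
beta_b = beta_a + 8 * z;           % [-7.75; -7.5; -8.75; 8]

pred_a = X_full * beta_a;
pred_b = X_full * beta_b;
fprintf('identical predictions: %d\n', isequal(pred_a, pred_b));

% Dropping Total leaves a full-column-rank design, so the fit is unique.
fprintf('rank with Total: %d of 4; without: %d of 3\n', rank(X_full), rank(parts));
```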

Using the Rank and Null Space techniques we learned in the classroom isn't just an academic exercise; it's the first step in rigorous Data Science.